For years, the advice was "trust your eyes." But with AI models like Flux and Gemini generating photorealistic images, your eyes are no longer enough. To truly spot a deepfake, we need to look at the data underneath the pixels.

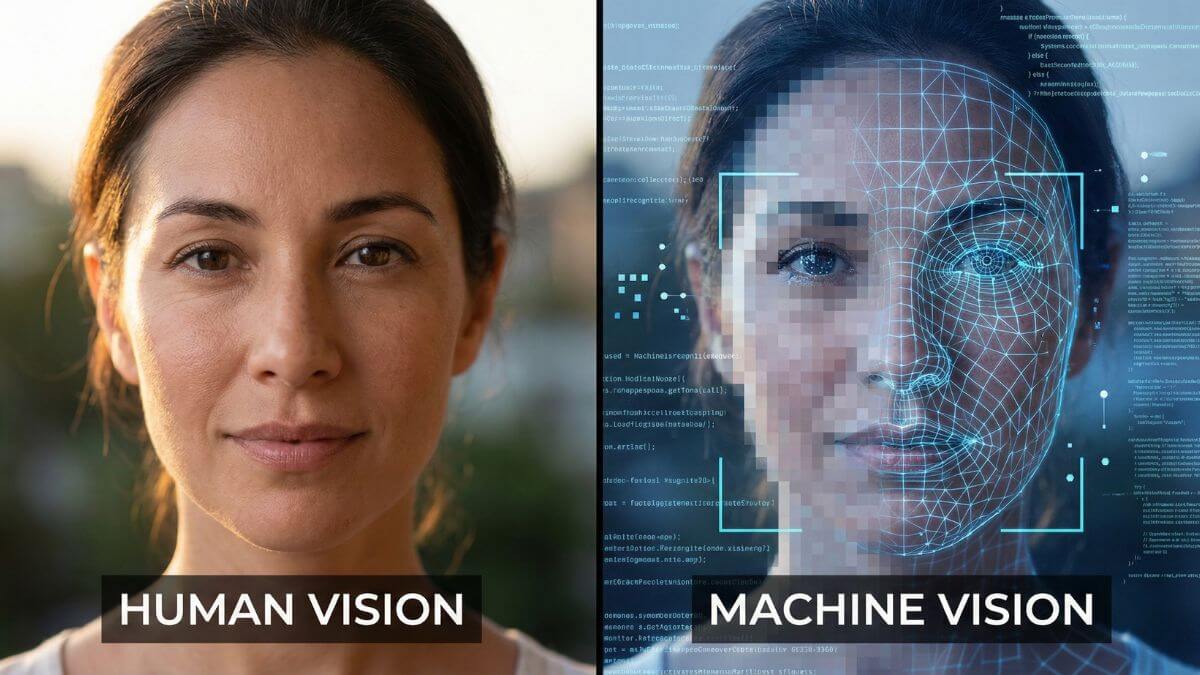

1. The Limit of Human Perception

Human brains are wired to recognize faces, not digital noise. When an AI generates a face, it often gets the "macroscopic" details (eyes, nose, mouth) right, but fails at the "microscopic" level. A video can look real to us while forensic software still measures statistical flaws we cannot perceive.

2. Error Level Analysis (ELA)

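ELA is a forensic technique that highlights differences in JPEG compression history. The image is resaved at a known quality and compared with the original, pixel by pixel. Regions that were compressed a different number of times, or at a different quality, change by a different amount. When a face is pasted or generated onto a body, the two regions often carry different error levels. To our eyes, it blends in. On an ELA map, the edited face often stands out as a brighter patch.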
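As a rough illustration of the resave-and-compare idea, here is a minimal sketch in Python. It assumes the third-party Pillow library is installed; the function names `error_level_map` and `mean_error` and the default quality of 90 are illustrative choices, not part of any particular scanner.

```python
import io

from PIL import Image, ImageChops, ImageStat


def error_level_map(image, quality=90):
    """Return the per-pixel difference between an image and a JPEG resave of it.

    Brighter pixels changed more when recompressed. The quality value is an
    arbitrary assumption; analysts usually try several.
    """
    original = image.convert("RGB")
    buffer = io.BytesIO()
    original.save(buffer, format="JPEG", quality=quality)
    buffer.seek(0)
    resaved = Image.open(buffer).convert("RGB")
    return ImageChops.difference(original, resaved)


def mean_error(ela_map, box):
    """Average error level inside box = (left, upper, right, lower)."""
    region = ela_map.crop(box).convert("L")
    return ImageStat.Stat(region).mean[0]
```

Comparing `mean_error` for a suspected face region against a background region gives a crude signal: a large gap suggests the two areas have different compression histories. It is only a hint, since sharp edges and fine texture also produce high error levels in untouched photos.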

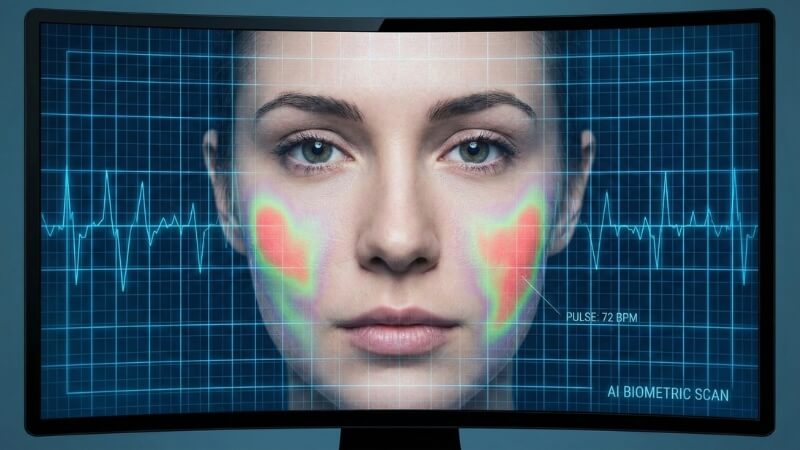

3. Biometric Inconsistencies

Real human skin flushes slightly with blood every time the heart beats. This subtle color change is invisible to us, but cameras can pick it up, a technique known as remote photoplethysmography (rPPG). Many deepfakes lack this natural "pulse," revealing them as lifeless digital puppets.
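To make the idea concrete, here is a minimal Python sketch of the last step of such a check. It assumes an upstream face tracker has already produced one average green-channel value per frame for a patch of skin; the function name `estimate_pulse_bpm` and the 40 to 180 bpm search range are illustrative assumptions. Real rPPG pipelines also need detrending and motion compensation, which are left out here.

```python
import math


def estimate_pulse_bpm(green_means, fps, min_bpm=40, max_bpm=180):
    """Find the strongest periodic component in a plausible heart-rate band.

    green_means: per-frame mean green value of a skin region (assumed input).
    Returns (bpm, peak_share), where peak_share is the fraction of in-band
    spectral power held by the winning rate. Returns (None, 0.0) when the
    signal carries no variation at all.
    """
    n = len(green_means)
    if n < 2:
        raise ValueError("need at least two frames")
    mean = sum(green_means) / n
    centered = [g - mean for g in green_means]

    powers = {}
    for bpm in range(min_bpm, max_bpm + 1):
        omega = 2 * math.pi * (bpm / 60.0) / fps  # radians per frame
        re = sum(v * math.cos(omega * i) for i, v in enumerate(centered))
        im = sum(v * math.sin(omega * i) for i, v in enumerate(centered))
        powers[bpm] = re * re + im * im

    total = sum(powers.values())
    if total == 0:
        return None, 0.0
    best = max(powers, key=powers.get)
    return best, powers[best] / total
```

A clip of a real face would be expected to show one dominant rate with a comparatively high `peak_share`. A missing or smeared peak is a reason for suspicion, not proof, since heavy compression and poor lighting can also erase the signal.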

4. Metadata and Invisible Watermarks

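Companies are starting to attach machine-readable signals to AI content. Google's SynthID embeds an invisible watermark: not a logo you can see, but a data pattern hidden in the noise of the image. C2PA, an open standard backed by Adobe and others, takes a different route and attaches cryptographically signed provenance metadata (Content Credentials) to the file. Our tools check for these hidden signatures first.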
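The metadata side of this check can be sketched with the Python standard library alone. The example below assumes a JPEG file, where C2PA manifests are carried in APP11 marker segments as JUMBF boxes labeled `c2pa`. The function names are hypothetical, and the check only reports that a manifest appears to be present. It does not validate the cryptographic signature, and it cannot detect a SynthID watermark, which requires Google's own detector.

```python
import struct

APP11 = 0xEB          # JPEG marker used for JUMBF boxes
START_OF_SCAN = 0xDA  # compressed image data begins after this marker


def iter_jpeg_segments(data):
    """Yield (marker, payload) for each header segment before the scan data.

    A simplified walker: it assumes every header segment carries a length
    field, which holds for typical files but not for every valid JPEG.
    """
    if data[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG stream")
    pos = 2
    while pos + 4 <= len(data) and data[pos] == 0xFF:
        marker = data[pos + 1]
        if marker == START_OF_SCAN:
            break
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])
        yield marker, data[pos + 4:pos + 2 + length]
        pos += 2 + length


def has_c2pa_hint(data):
    """Heuristic: True if any APP11 segment mentions the 'c2pa' label."""
    return any(
        marker == APP11 and b"c2pa" in payload
        for marker, payload in iter_jpeg_segments(data)
    )
```

Called as `has_c2pa_hint(open(path, "rb").read())`, a `True` result means the file deserves a full verification pass with a proper C2PA validator. A `False` result says little, because metadata is easily stripped when an image is re-uploaded or screenshotted.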

5. The Future of Detection

As deepfakes get better, detection tools must get smarter. The future of online safety isn't about training humans to spot fakes. It's about having an "AI Bodyguard" that scans content for you before you trust it.

Test the Technology

See forensic analysis in action. Upload a file to check its digital pulse.
